Exfiltrating Data via Images and Why Trained Models Aren’t Ready for Malware Core Integration

Background: Today’s threat landscape includes many malware families, and one notable type is the infostealer. An infostealer executes a payload on the victim’s machine, collects sensitive information, archives it, and sends the archive to a Command and Control (C2) server.

However, what if a Threat Actor (TA) wants to steal information without causing noticeable spikes in activity or leaving significant traces on the victim’s machine? In that case, the TA may use the webcam to gather information covertly.

Attack Scenario:

A threat actor may attempt to extract as much information as possible from a victim’s machine, including biometric data. This data can then be used for various malicious purposes, ranging from cyber espionage to fraud.

In the case of cyber espionage, the malware may remain dormant until a specific targeted individual appears in front of the machine’s camera, at which point it activates to collect high-value intelligence.

Exploit Development Stage:

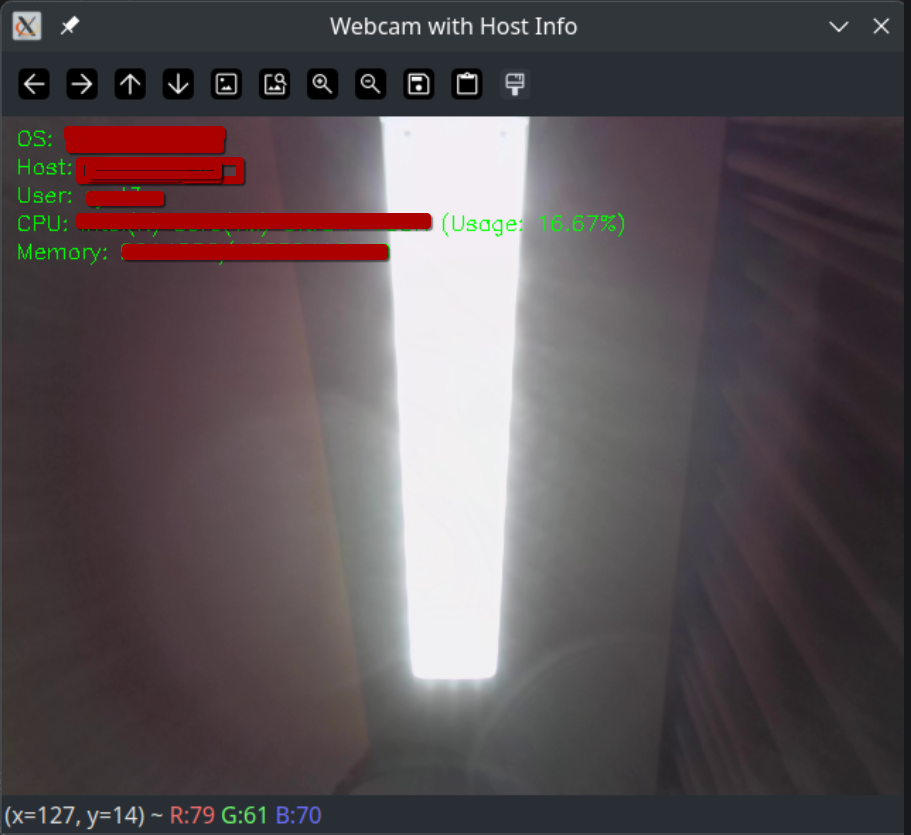

After activation, the malware needs time to observe the environment in which it is running. During this phase it can collect system information and other targeted data, capture a screenshot that shows the data in context, and send both to the C2 server.

In the final stage, the malware must be able to recognize biometric data, such as the target’s face, so that it can trigger its hidden functionality. At this stage, however, the attacker faces two key challenges:

- The victim’s machine must have sufficient hardware resources to run facial recognition locally without causing the noticeable slowdowns the attacker is trying to avoid.

- The attacker must account for the size of the offline-trained model: bundling it can make the compiled malware significantly larger, which makes delivery impractical and could raise suspicion.

Data Exfiltration: As a final step, the attacker can upload the collected data to image hosting platforms. Because these uploads resemble ordinary traffic to legitimate services, this method can help evade detection and make the exfiltration appear legitimate.

Mitigation:

- Always use a robust privacy protection mechanism for your webcam, such as a physical shutter or cover.

- Deny webcam access to applications by default, and grant it only to those that genuinely need it.

- Disable microphone access for your webcam at the operating system level.
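On the defensive side, it helps to know which programs currently have the webcam open. The following is a minimal sketch, assuming a Linux system that exposes cameras as V4L2 devices under /dev/video* and provides the /proc filesystem; the function name webcam_users is hypothetical. It only reports usage, it does not block anything, and without root privileges it can only inspect the caller's own processes.

```python
import os


def webcam_users(proc_root="/proc", dev_prefix="/dev/video"):
    """Map PID -> process name for every process holding a video device open.

    Assumes a Linux-style /proc layout where /proc/<pid>/fd/<n> are symlinks
    to the opened files. Processes we are not allowed to inspect are skipped.
    """
    users = {}
    try:
        entries = os.listdir(proc_root)
    except OSError:
        return users  # no /proc (e.g. not Linux): nothing to report
    for entry in entries:
        if not entry.isdigit():
            continue  # skip non-PID entries such as "self" or "meminfo"
        fd_dir = os.path.join(proc_root, entry, "fd")
        try:
            fds = os.listdir(fd_dir)
        except OSError:
            continue  # process exited, or we lack permission to read its fds
        for fd in fds:
            try:
                target = os.readlink(os.path.join(fd_dir, fd))
            except OSError:
                continue
            if target.startswith(dev_prefix):
                try:
                    with open(os.path.join(proc_root, entry, "comm")) as f:
                        name = f.read().strip()
                except OSError:
                    name = "?"
                users[int(entry)] = name
                break  # one matching descriptor is enough for this PID
    return users


if __name__ == "__main__":
    # Run as root to also see processes owned by other users.
    for pid, name in sorted(webcam_users().items()):
        print(f"{pid}\t{name}")
```

A process that shows up here while you are not in a video call or using a camera application deserves a closer look.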

Conclusion: It is still too early for trained models to be effectively integrated into the core of sophisticated malware, primarily because of the drawbacks described above. However, I predict that within a few years, as consumer hardware handles AI workloads more efficiently, various threat actors will adopt this approach even in common attacks.